Methods Section Feedback in thesify

If you are looking for methods section feedback, you are usually trying to answer the same question reviewers ask: does your Methods section include enough detail to evaluate what you did, and enough transparency to understand how your results were produced?

thesify’s Methods feedback report, designed for scientific papers, uses a rubric to review your Methods section across Experimental Design, Detail and Replicability, and Research Question Alignment. It flags under-specified design choices, missing operational detail, unclear analysis pathways, and weak access statements, then provides revision-oriented suggestions you can apply.

In this post, we unpack what you can expect from the Methods report itself, how the rubric is organised on the page, and what the feedback looks like when thesify flags common issues, such as unclear sampling and eligibility logic, missing procedural decision rules, under-specified analysis plans, incomplete tool and version reporting, and weak access statements for data, materials, or code. You will also leave with a methods section checklist aligned to the rubric, so you can sanity-check your draft before submission.

If you want the broader context for thesify’s section-level feedback across Methods, Results, and Discussion, see Introducing In-Depth Methods, Results, and Discussion Feedback in thesify.

Methods Section Feedback Rubric Categories in thesify

thesify’s methods section feedback is delivered as a rubric-based report organised into three main categories:

Experimental Design

Detail and Replicability

Research Question Alignment

The Methods report is organised into three expandable rubric categories, shown in a fixed scan order.

Each category expands into sub-categories so you can see what the rubric is checking, where key information is missing or under-specified, and what the report suggests you revise.

Importantly, thesify does not stop at flagging gaps. Under each main category, the report also provides concrete revision suggestions, meaning prompts that tell you what to clarify, specify, or document so your Methods section is easier to evaluate in peer review and easier to verify for reproducibility.

Experimental Design Rubric Category

This category checks whether your Methods section is evaluable for peer review. You will see sub-categories that reflect your study type and design logic. The report typically checks whether a reviewer can understand:

what design you are using and what it can support

how you constructed the sample, corpus, or dataset, including the rules used

what procedures produced the data you later analyse

what analysis plan is specified, including key decisions and checks

What the suggestions typically ask you to do: make the study logic explicit, for example by stating the design and unit of analysis clearly, specifying inclusion and exclusion logic, and documenting procedural decision rules that shape the dataset.

Detail and Replicability Rubric Category

This category checks whether your Methods section is verifiable, meaning a reader can follow what you did without guessing missing steps. The sub-categories typically focus on whether you have documented:

decision rules and operational definitions that shape the data

tools, versions, settings, or parameters that affect outputs

materials, instruments, codebooks, scripts, or workflows that underpin the Methods

a clear access statement for data, materials, or code, including constraints when sharing is limited

What the suggestions typically ask you to do: add the operational detail a reviewer needs to audit your workflow, including the tool and version information that materially affects results, and clear statements about where key materials can be accessed.

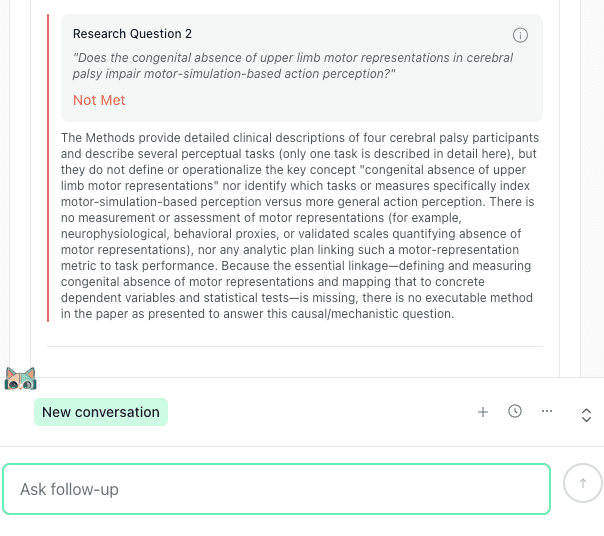

Research Question Alignment Rubric Category

This category checks whether your Methods section can actually answer your stated research questions. It looks for a traceable path from question to inputs to analysis, including:

which data sources, measures, or constructs correspond to each question

which analysis steps produce the outputs you report for each question

where mapping language is missing, ambiguous, or implied rather than stated

What the suggestions typically ask you to do: add explicit mapping language so a reviewer can see, without inference, how each research question is operationalised and which analytic steps generate the results you present.

Scan Order for Methods Section Review

The report appears in a fixed sequence in thesify. If you want to read it quickly without losing the thread, use the same order:

Experimental Design: confirm the design logic is clear and evaluable.

Detail and Replicability: add the operational detail that supports verification.

Research Question Alignment: make the question-to-method mapping explicit.

In the next sections, we unpack each category in the same sequence you see in thesify, starting with Experimental Design, where the expandable checks vary by study type.

Experimental Design Feedback in thesify’s Methods Rubric

In thesify’s methods section feedback, Experimental Design is where the report assesses whether your Methods section can be evaluated on peer review terms. This is the category that focuses on methodological logic, not phrasing. It asks, in effect, whether a knowledgeable reader can reconstruct what you did, why that design fits your question, and how your design choices constrain what you can claim.

Just as importantly, Experimental Design is where thesify applies a study-type lens. The expandable checks you see under this category change depending on what kind of Methods section you are writing, for example an empirical quantitative study, an empirical qualitative study, or an evidence synthesis or review. That is deliberate, because the standards reviewers use to assess “rigor” and “transparency” are not identical across designs.

When you expand Experimental Design in thesify, you will see a study-type label and a set of rubric checks (each rated and explained) that reflect what a reviewer needs to evaluate the design logic.

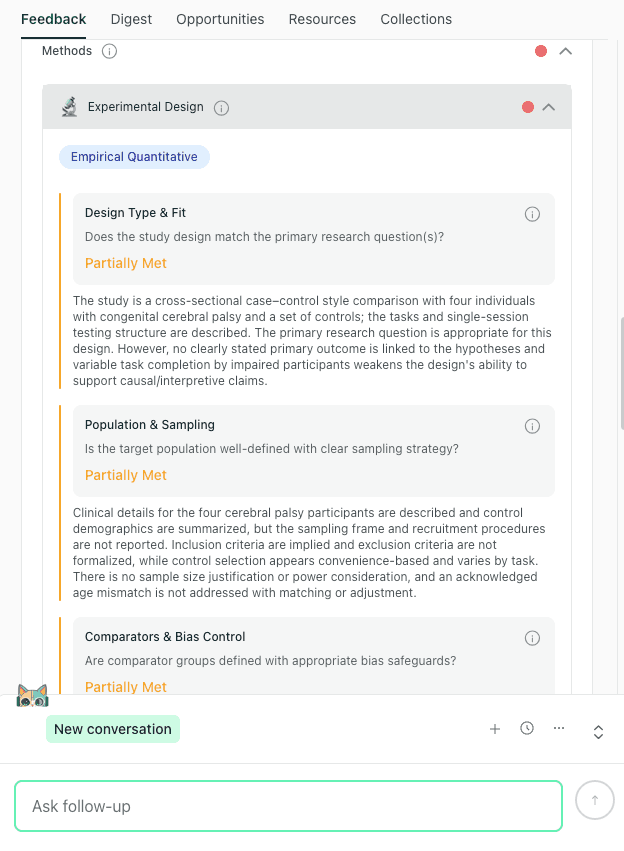

Example of the Experimental Design category in thesify’s Methods feedback, with expandable rubric checks and revision-focused notes.

How thesify Tailors Experimental Design Checks by Study Type

When you open Experimental Design, you will see sub-rubrics that reflect the logic of your study type. The report is still recognisably a “Methods section review,” but the checks emphasise different details. For example:

In empirical quantitative Methods, the report tends to prioritise design type and fit, population and sampling definition, comparator and bias control (where relevant), and an explicit analysis and endpoint plan.

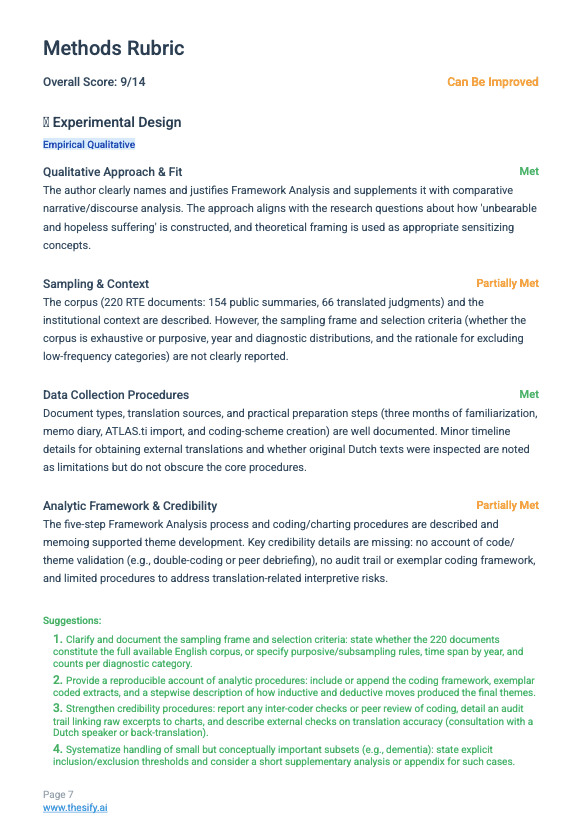

In empirical qualitative Methods, the report tends to prioritise sampling logic, data generation context, analytic approach specification, and credibility procedures.

In evidence synthesis and review Methods, the report tends to prioritise search strategy transparency, screening and eligibility decision rules, extraction procedures, synthesis approach, and study quality or bias appraisal where relevant.

This tailoring keeps the feedback aligned with how reviewers evaluate Methods across different designs.

Core Experimental Design Checks You Will Typically See

Regardless of study type, the Experimental Design category usually works across four methodological questions. The labels will vary by design, but the underlying checks are consistent.

1) Design Statement and Fit

You will often see feedback when a design is named but not operationalised. The report prompts you to make explicit:

what design you are using, with a concrete design statement (not only a label)

what the design can support (and what it cannot)

what the unit of analysis is, and what timeframe or setting boundaries apply

what outcomes, endpoints, or constructs are central to the design (when applicable)

2) Sampling, Eligibility, and Context

This is where Methods sections frequently lose reviewers, particularly when inclusion logic is implied rather than stated. thesify’s feedback commonly pushes for clarity on:

the sampling frame or corpus construction logic

inclusion and exclusion criteria, stated as decision rules

recruitment, selection, and exclusion stages (including post hoc exclusions)

context that matters for interpretation, such as setting, population constraints, or case definitions

3) Procedures and Operational Definitions

Experimental Design feedback often appears when procedures are described as “standard,” “as usual,” or conceptually, but not in a way that a reader can trace. The report typically checks for:

a stepwise description of what happened, in what order

decision rules and thresholds that shape what data are produced or retained

operational definitions for key categories, variables, or classification schemes

documentation of deviations, stopping rules, or protocol changes where relevant

4) Analysis Plan Clarity

Even strong Methods sections can be flagged here if the analysis is described at too high a level. The report commonly prompts you to specify:

how the analysis produces the outputs you present in Results

primary versus secondary analyses, or planned versus exploratory components

key modelling or coding decisions that shape interpretation

credibility checks appropriate to the design (for example, validation procedures, audit trail practices, or robustness checks, depending on method)

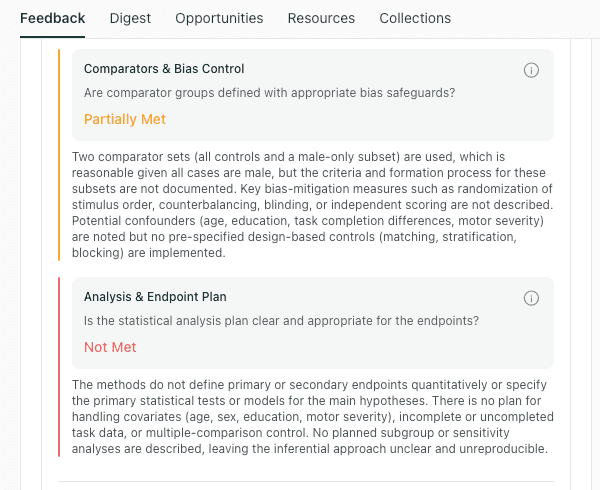

Example of Experimental Design sub-checks in thesify, including comparator and bias safeguards and analysis plan clarity.

Empirical Qualitative Methods: What thesify Commonly Checks

The exported report mirrors the on-page rubric breakdown, which helps when you are revising with co-authors.

For empirical qualitative Methods sections, Experimental Design feedback tends to focus on whether your analytic logic is transparent and whether credibility procedures are stated in a way a reviewer can assess. You can typically expect checks that press you to clarify:

Sampling strategy and rationale, including how participants, cases, or texts were selected and what “enough” meant for your design

Data generation context, including setting, timeframe, and the conditions under which data were produced (interviews, observations, documents, and so on)

Analytic approach specification, including how you moved from data to coding to themes, categories, or claims, with clear decision points

Credibility procedures, including what you did to support trustworthiness (for example, double-coding logic, disagreement handling, audit trail practices, triangulation, or reflexive documentation), stated in procedural rather than aspirational terms

Empirical Quantitative Methods: What thesify Commonly Checks

Experimental Design expands into design-specific checks so you can see what a reviewer will look for first.

For empirical quantitative Methods sections, Experimental Design feedback tends to focus on whether the study design is specified in a way a reviewer can evaluate internal validity and analytic adequacy. You can typically expect checks that press you to clarify:

Design type and fit, including what the design can support and what it cannot support given the research question framing

Population and sampling, including recruitment or selection logic, inclusion and exclusion criteria stated as decision rules, and sample size rationale (power, sensitivity, or an equivalent justification)

Comparators and bias control, including how comparator groups are defined, whether confounding and baseline differences are handled, and what safeguards exist (randomisation, counterbalancing, blinding, matching, stratification, or statistical adjustment, depending on design)

Analysis and endpoint plan, including primary versus secondary endpoints, planned models or tests, covariates, missingness handling, and any multiple-comparison or sensitivity logic

Evidence Synthesis and Review Methods: What thesify Commonly Checks

For evidence synthesis or review Methods sections, Experimental Design feedback tends to focus on whether your pipeline is reconstructable and defensible, meaning a reader could understand how evidence entered the review and how it was synthesised. You can typically expect checks that press you to specify:

Search strategy transparency, including databases, time limits, search strings or query logic, and any constraints that shape recall

Screening and eligibility decision rules, including inclusion and exclusion criteria and how screening was executed across stages

Extraction and coding procedures, including what was extracted, how decisions were recorded, and how disagreements were resolved

Synthesis approach, including how studies were combined (qualitative synthesis, quantitative synthesis, narrative synthesis) and how heterogeneity or comparability was handled

Quality or bias appraisal, where relevant to your design, including what was assessed and how those assessments affected synthesis decisions

The tone of this feedback is typically, “make your review pipeline auditable, and make your inclusion logic defensible.”

If you are already dealing with Methods critiques from reviewers, the same prioritisation strategy applies as in a response letter. Fix what blocks evaluation first, then document the checks and limits that prevent overclaiming. The workflow in How to Respond to Journal Reviewer Comments and Conflicting Reports is a helpful reference for handling Methods-focused critique without letting revisions sprawl.

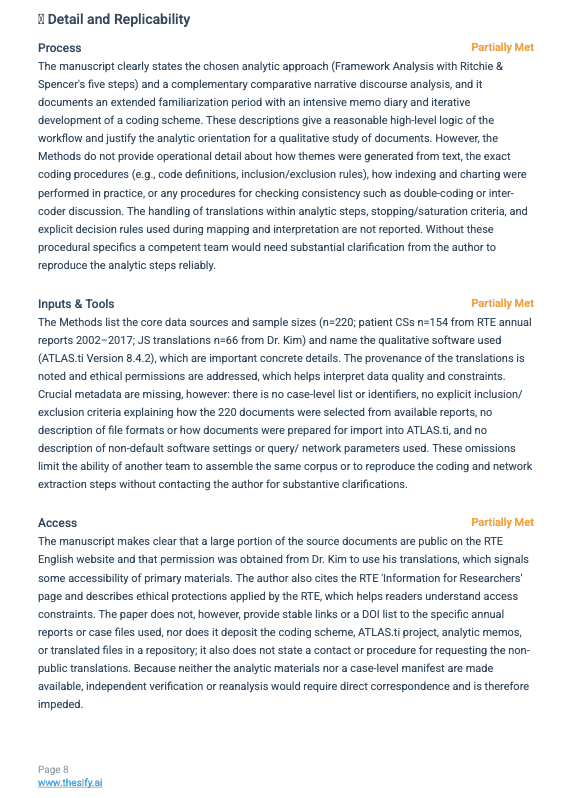

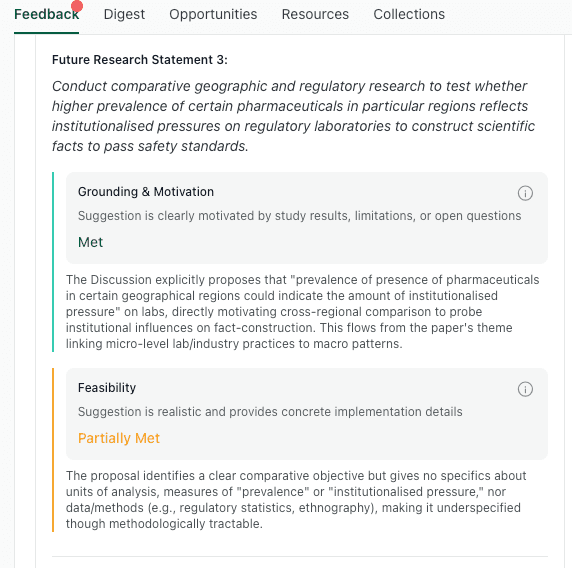

Detail and Replicability Feedback in thesify’s Methods Rubric

If “Experimental Design” is about whether your Methods can be evaluated, Detail and Replicability is about whether it can be verified. This is the part of thesify’s methods section feedback that tends to catch strong studies with under-specified workflows, where a reviewer’s main concern is not the design choice itself, but whether the paper documents what was done with enough operational clarity to trust the outputs.

In thesify, this category follows a consistent structure: Process, Inputs and Tools, and Access.

Detail and Replicability is structured into Process, Inputs and Tools, and Access so you can diagnose what is missing and where.

Process: The Operational Workflow Behind Your Methods

Detail and Replicability in thesify breaks down into Process and Inputs and Tools, each with its own rubric rating and notes.

This subsection focuses on what happened, in what order, and under what decision rules. thesify often flags Methods drafts that describe a workflow at a high level but omit the operational details that determine what ends up in the analysis set.

What you can expect thesify to offer feedback on here:

Decision rules and thresholds

For example, cutoffs for exclusions, stopping rules, screening thresholds, or coding decisions that determine whether an item is included.

Preprocessing and data handling steps

Steps that materially shape results, such as cleaning rules, transformations, aggregation logic, or how ambiguous cases were treated.

Protocol-level traceability

Whether a reader can reconstruct the sequence of steps without guessing, including what was done manually versus automatically, and where judgement calls occurred.

A useful test is whether your Methods allow a competent reader to recreate the pipeline on their own material. If the answer is “only if they already know our internal workflow,” this is where thesify tends to flag gaps.
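If your pipeline is scripted, one way to make decision rules reconstructable is to write each inclusion or exclusion rule as a named, ordered check and record how many items each stage removes. The Python sketch below assumes a hypothetical record structure (`age`, `consented`, `completion_rate`) and placeholder thresholds; it illustrates stating rules explicitly and is not a thesify feature or a required reporting format.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Record:
    # Hypothetical fields, for illustration only
    participant_id: str
    age: int
    consented: bool
    completion_rate: float  # share of items answered, 0.0 to 1.0


# Each rule is (stage label, predicate returning True if the record is retained).
# Thresholds are placeholders; report your own values in the Methods.
RULES: list[tuple[str, Callable[[Record], bool]]] = [
    ("Eligibility: aged 18 or older", lambda r: r.age >= 18),
    ("Eligibility: consent recorded", lambda r: r.consented),
    ("Post hoc exclusion: completion rate >= 0.80", lambda r: r.completion_rate >= 0.80),
]


def apply_rules(records: list[Record]) -> tuple[list[Record], list[tuple[str, int]]]:
    """Apply the rules in order; return retained records and a per-stage exclusion log."""
    log: list[tuple[str, int]] = []
    retained = records
    for label, keep in RULES:
        before = len(retained)
        retained = [r for r in retained if keep(r)]
        log.append((label, before - len(retained)))
    return retained, log


if __name__ == "__main__":
    sample = [
        Record("p01", 24, True, 0.95),
        Record("p02", 17, True, 1.00),
        Record("p03", 31, False, 0.90),
        Record("p04", 45, True, 0.60),
        Record("p05", 29, True, 0.85),
    ]
    kept, exclusions = apply_rules(sample)
    for label, n_excluded in exclusions:
        print(f"{label}: excluded {n_excluded}")
    print(f"Analysis set: {len(kept)} of {len(sample)}")
```

Because the rules run in a fixed order, the log gives you stage-by-stage exclusion counts that you can report directly, which is the kind of traceability the Process check looks for.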

Inputs And Tools: What You Used, Exactly

This subsection focuses on whether the tools and inputs are specified in a way that makes the Methods interpretable and reproducible. Many Methods sections mention software or instruments in passing, but omit the details that reviewers need to evaluate the reliability of what was produced.

thesify’s feedback here commonly focuses on:

Software, versions, and packages

Not just naming a tool, but documenting versions and key dependencies where they affect outputs.

Settings and parameters

Defaults and parameter choices that materially change results, such as model settings, filters, algorithm thresholds, or configuration choices.

Measures, instruments, and materials

Enough detail to evaluate measurement quality, such as item wording or scale structure where relevant, translation or validation steps, and the precise form of any stimuli or prompts used.

In evidence synthesis work, this subsection also extends naturally to “inputs” like database selection, limits applied, and extraction templates, because those are functionally the tools that define what evidence enters the pipeline.

Access: Can a Reader Verify the Underlying Artifacts?

The “Access” subsection focuses on whether there is a credible pathway for verification, even when full open sharing is not possible. Reviewers increasingly look for stable references to the underlying artifacts that allow them to assess whether your Methods were carried out as described.

thesify tends to flag Methods sections that:

Provide a general data availability statement without stable identifiers, such as DOIs, repository links, or persistent URLs.

Refer to materials as “available on request,” without specifying what will be shared, under what constraints, and how a reader would request it.

Omit access paths for items that are central to evaluation, such as codebooks, scripts, search strategies, screening logs, or extraction forms (for review-type studies).

Example of the Access check in thesify’s Methods rubric, including copy-ready revision suggestions to strengthen availability statements.

This does not mean every project must publish everything. It means the Methods should state what exists, where it lives, and what access constraints apply, so that verification is possible within the limits of ethics, privacy, or licensing.

After the rubric checks, thesify provides concrete revision suggestions. These are phrased as specific documentation tasks, so you can translate a “partially met” check into edits you can apply immediately.

Each rubric category includes revision suggestions that tell you what to clarify or document to close the highest-impact gaps.

If you want a clean way to export this rubric breakdown and share it with co-authors during revisions, thesify’s downloadable feedback report workflow is designed for exactly that handoff.

Research Question Alignment Feedback

A Methods section can be detailed and still fail a basic reviewer test: does it clearly explain how the study’s questions are answered? That is what thesify’s Research Question Alignment category is designed to check. It evaluates whether the Methods section connects your research questions to the data you collected, the variables or constructs you defined, and the analysis steps you planned to run.

This category is particularly useful because “alignment” problems often show up in peer review as vague comments, such as “methods do not match aims,” “unclear operationalization,” or “analysis does not address the research questions.” thesify surfaces those issues in a more direct way.

Research Question Alignment flags when a question cannot be answered from the Methods as written, and explains what link is missing.

Mapping Research Questions to Measures, Data Sources, and Variables

In this subsection, thesify’s methods section review focuses on whether each research question has identifiable inputs. In other words, can a reader tell what information is being used to answer each question, and how that information is defined?

Common alignment gaps thesify flags include:

Key variables or constructs are implied, not defined

The Methods refer to concepts (for example, trust, exposure, severity, adherence) without stating how they were measured, coded, or classified.

Data sources are not tied to specific questions

The paper lists datasets, participants, documents, or instruments, but it is unclear which source supports which research question.

Operational definitions are incomplete

Readers cannot tell what counts as a case, a category membership, or a positive event, which undermines interpretation and replicability.

What thesify typically encourages you to do is to add explicit definitions and short mapping language, so the reader can see, for each question, what is being measured and where the measure comes from.

Mapping Research Questions to Analysis Steps

The second alignment check focuses on whether the analysis described in Methods actually answers the questions posed. This is often where Methods sections become hard to evaluate, because the analysis plan is present but not anchored to the questions that motivated it.

Common gaps thesify flags include:

A methods description that lists analyses, but not their purpose

Tests, models, or coding procedures are named without stating which research question each one addresses.

Mismatch between question type and analysis type

For example, a question framed as causal or explanatory is paired with an analysis that can only support association, or a question framed as comparative is paired with analysis steps that never specify the comparison set.

Unclear primary versus secondary analyses

The Methods include many analyses but do not indicate which ones are confirmatory and which are exploratory, or which outcome is primary.

A straightforward fix, and one thesify often implicitly prompts, is to add one or two sentences that link each research question to its corresponding analytic step. This can be as simple as a mapping line in the Methods section, for example: “To address RQ1, we did X using Y,” followed by what outcome or coded construct that analysis produces.
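If you keep an analysis plan alongside the manuscript, you can hold this mapping as a small table and check it for gaps before drafting the Methods prose. The Python sketch below uses hypothetical research questions, data sources, measures, and analyses; it flags any question that lacks one of the three links and drafts a "To address RQ…" sentence for complete rows. It is an illustration of the mapping idea, not how thesify performs its check.

```python
from dataclasses import dataclass


@dataclass
class RQMapping:
    # One row per research question; empty strings mark links not yet stated.
    rq: str
    data_source: str = ""
    measure: str = ""
    analysis: str = ""


def missing_links(mappings: list[RQMapping]) -> dict[str, list[str]]:
    """Return, for each research question, the mapping fields that are still empty."""
    gaps: dict[str, list[str]] = {}
    for m in mappings:
        missing = [f for f in ("data_source", "measure", "analysis") if not getattr(m, f).strip()]
        if missing:
            gaps[m.rq] = missing
    return gaps


def mapping_sentence(m: RQMapping) -> str:
    """Draft a mapping sentence from a complete row."""
    return f"To address {m.rq}, we analysed {m.measure} from {m.data_source} using {m.analysis}."


if __name__ == "__main__":
    # Hypothetical plan, for illustration only
    plan = [
        RQMapping("RQ1", "the baseline survey", "self-reported adherence", "a mixed-effects logistic regression"),
        RQMapping("RQ2", "interview transcripts", "", "reflexive thematic analysis"),
    ]
    gaps = missing_links(plan)
    for m in plan:
        if m.rq not in gaps:
            print(mapping_sentence(m))
    print(gaps)
```

The generated sentence is only a starting point; you would still edit it to name the specific outcome or coded construct the analysis produces.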

Each rubric check includes concrete suggestions that translate “alignment” into specific Methods edits

If you want a quick template for writing research questions with cleaner variable logic, see How to Write a Research Hypothesis: Steps & Examples, which is useful when alignment problems start upstream in how the questions are formulated.

Methods Section Checklist Based on thesify’s Rubric

Use this as a fast pre-flight before you run the Methods report, or as a guardrail after you revise. Each item is phrased as a yes or no check, because that is how reviewers typically judge Methods clarity during methods section review.

Experimental Design

Can you state your study design in one sentence, including the unit of analysis and setting or timeframe?

Is it clear what the design can support, and what it cannot?

Are your primary outcomes, endpoints, or focal constructs explicitly identified?

Is your comparison logic explicit, including groups, conditions, or timepoints, if applicable?

Is your sampling frame or corpus construction logic stated in plain terms?

Are inclusion and exclusion criteria written as decision rules, not implied in narrative?

Do you specify when exclusions happened, and why?

Are data generation procedures described in a clear sequence, from input to analysable material?

Detail and Replicability

Do you document decision rules, thresholds, and operational definitions that shape what is included?

Are preprocessing, cleaning, transformation, or aggregation steps described clearly enough to follow?

Do you specify how ambiguous cases, missingness, or outliers were handled, where relevant?

Are your tools and software named with versions, and key packages or dependencies where they matter?

Do you report settings or parameters that materially affect outputs?

Are instruments, measures, templates, or codebooks described with enough specificity to evaluate and reproduce their use?

Do you include a clear access statement for data, materials, code, or supporting documentation, including constraints when sharing is limited?

Research Question Alignment

For each research question, can a reader identify the data source or material used to answer it?

For each research question, can a reader locate the measure, variable, or coded construct that operationalises it?

For each research question, is the analysis step that produces the reported output stated explicitly?

Are any causal or explanatory claims appropriately limited by the design described in your Methods?

If you are revising a full thesis chapter or manuscript section-by-section, you may find it helpful to use a short revision loop rather than trying to fix everything in one pass. The workflow in How to Improve Your Thesis Chapters Before Submission: 7-Step AI Feedback Guide pairs well with this checklist.

thesify’s Methods Section Feedback FAQ

What Is Methods Section Feedback?

Methods section feedback is a structured evaluation of whether your Methods section contains enough information for a reader to assess how the study was conducted and how results were produced. In practice, it focuses on design clarity, sampling and eligibility rules, data collection procedures, analysis planning, and replicability details.

How Detailed Should a Methods Section Be for Reproducibility?

A Methods section should be detailed enough that a competent researcher could verify what you did without guessing key steps. That usually means you specify decision rules, thresholds, exclusions, tool settings, and the exact workflow that turns raw inputs into reported results. The level of detail varies by discipline, but the standard is the same: remove ambiguity that changes interpretation or outcomes.

What Does a Methods Rubric Typically Evaluate?

A Methods rubric typically evaluates three broad areas:

Whether the experimental design is appropriate and described clearly (design type, sampling, procedures, analysis plan)

Whether the Methods include enough detail and replicability information (process steps, tools and settings, access to materials)

Whether the Methods align with the research questions, meaning each question can be traced to measures, data sources, and analysis steps

What Should Be Included in a Methods Section Checklist?

A strong methods section checklist usually covers:

Design type, unit of analysis, timeframe, outcomes or endpoints

Sampling frame, recruitment, inclusion and exclusion criteria, sample size rationale

Data collection procedures and operational definitions

Analysis plan, credibility checks, and missing-data handling where relevant

Tools, versions, parameter choices, and access to key materials (data, codebooks, scripts, search strategies)

If you want a complementary checklist for what happens after the Methods is ready and you are preparing for journal submission, see Submitting a Paper to an Academic Journal: A Practical Guide.

How Do I Report Sampling and Inclusion Criteria Clearly?

Write sampling and eligibility information so a reader can answer three questions quickly:

Who or what was eligible, and under what rules?

How were eligible cases identified and selected?

What exclusions were applied, and at what stage?

You do not need long prose, but you do need explicit criteria, plus a short rationale for why those criteria fit your research question.

What Credibility Checks Do Reviewers Expect in Qualitative Methods?

Reviewers typically expect you to describe how you ensured analytic trustworthiness. Depending on your approach, that may include an audit trail, double-coding or independent coding, disagreement resolution, reflexive memos, triangulation, or clear documentation of how codes and themes were generated and refined. The key is to show the reader how interpretive steps were managed, not just that analysis “was conducted.”

What Should I Include About Software, Tools, and Versions?

At minimum, include the software and version, plus any packages or dependencies that materially shape your results. You should also document key settings or parameters where defaults can change outputs. This applies to statistical software, qualitative analysis tools, and review-management pipelines.
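If your analysis runs in Python, a short script like the one below can capture the interpreter and package versions in the environment that produced your results, so the versions you report in Methods are recorded rather than recalled. This is a minimal sketch using the standard library (`platform`, `importlib.metadata`); the package names are placeholders, and other tools need their own equivalent.

```python
import platform
from importlib import metadata


def software_versions(packages: list[str]) -> dict[str, str]:
    """Return the Python version plus the installed version of each named package."""
    versions = {"python": platform.python_version()}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Record the absence explicitly instead of silently skipping it
            versions[name] = "not installed"
    return versions


if __name__ == "__main__":
    # Placeholder package list; replace with the dependencies your analysis uses.
    for name, version in software_versions(["numpy", "pandas", "statsmodels"]).items():
        print(f"{name}=={version}")
```

Saving this output next to your analysis outputs gives you a record to cite when you write the tools and versions sentence, although settings and parameters still need to be documented separately.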

How Do I Share Materials If I Cannot Share Data Publicly?

If data cannot be shared, state the constraint clearly (ethics, privacy, licensing), then offer the strongest verification path available. That may include controlled access procedures, de-identified metadata, codebooks, analysis scripts, synthetic data, or detailed appendices that allow others to audit the workflow.

Next Steps: Where to Apply Methods Feedback in Your Revision Pipeline

Once you have a clear picture of what thesify is flagging in your Methods section, the most productive next move is to decide where that feedback sits in your broader revision sequence. Methods issues tend to cascade, because unclear design choices affect what you can claim in the Results and how you interpret those results in the Discussion.

A practical way to use Methods section feedback without letting revisions sprawl is to treat it as a short pipeline with a clear stopping rule.

Run a Two-Pass Methods Revision

Pass 1: Fix What Blocks Evaluation

Start with items that prevent a reviewer from understanding what you did.

Design type and fit statements that are missing or too vague

Eligibility rules, sampling frame, and the logic of comparisons

The analysis pathway from inputs to outputs (including credibility checks)

Pass 2: Add What Enables Verification

Then fill in the details that prevent “insufficient detail” reviewer comments.

Decision rules and thresholds that shape the dataset or analytic set

Tools, versions, and settings where outputs depend on configuration

Access statements for materials, codebooks, scripts, or search strategies

If you want to apply the same rubric-based approach beyond Methods, start with the section-level overview of how thesify handles Methods, Results, and Discussion feedback in one workflow, then move through the sections that are most likely to be queried in peer review.

For a lightweight way to keep your revisions staged and defensible, the checklist in tW: Build a Review Pipeline For Your Work pairs well with this approach, because it helps you decide what to fix now, what to defer, and what to document as a limitation.

Try Methods Section Feedback on Your Draft

Create a free thesify account, upload your manuscript as a Scientific Paper, and review the Methods rubric results to see where your design, sampling, procedures, analysis plan, and replicability details are under-specified. Then revise and re-run the Methods report to confirm you have closed the highest-impact gaps before submission.

Related Posts

Methods, Results, and Discussion Feedback for Papers: If you are revising a scientific paper, the Methods, Results, and Discussion sections are where peer review often becomes concrete. Reviewers focus less on whether the topic is interesting and more on whether the study can be understood, evaluated, and trusted from what you wrote. Learn what reviewers look for in Methods, Results, and Discussion, and how section-level feedback helps you fix design, reporting, and interpretation gaps.

How to Use thesify’s Downloadable Feedback Report for Pre‑Submission Success: With more universities encouraging the use of AI writing tools, a new challenge emerges: how to document machine‑generated feedback in a way that is transparent and easy to share. This is where AI feedback report PDFs come in. thesify, an AI‑supported tool for researchers and students, doesn’t just highlight typos or suggest synonyms. thesify produces a structured report you can download as a PDF and keep beside your draft while revising.

How to Get Table and Figure Feedback with thesify: Tables and figures are often where peer review gets concrete. Reviewers may accept your framing and methods, then still request “major revisions” because a key table is hard to interpret, a figure is not referenced at the right moment, or the caption does not explain what the reader should conclude. Learn to improve tables and figures in your manuscript. This guide shows how thesify provides structured feedback, exportable reports, and co-author collaboration.