High-Impact Grant Writing for 2026 Review Panels

A high-impact grant writing strategy for 2026 review panels starts with one goal: make your application easy to score under cognitive load. Polished writing and sound ideas are baseline expectations. Reviewers triage fast, and proposals lose points when the logic is hard to track, even when the prose is clear.

In this post, you will apply a reviewer-first Signal-to-Noise framework to strengthen your grant proposal feedback loop, using a specific aims page template as the main case study. This involves identifying exactly where your logic is hard to track before a panel ever sees your draft.

Use a structured logic check to ensure your narrative remains consistent from the first page to the last.

You will learn how to reduce reviewer fatigue through structural alignment, how to close the gap between aims and impact with an Evidence Loop, and how to avoid AI-sounding prose by stress-testing logic rather than generating text. You will finish with five last-hour checks that catch common scoring problems before submission.

The Burden of Choice: Solving for Reviewer Fatigue

In this section, you will learn how reviewer fatigue shows up in grant peer review. The same cognitive hurdles identified in What Reviewers Notice First In Your Paper apply here, where titles and abstracts shape a reader's snap judgment of a manuscript. Your task is to identify what “cognitive friction” looks like on a grant proposal page so you can remove it before a panel ever sees your draft.

Why Reviewer Fatigue Changes Grant Scoring

Grant peer review is an evaluation task under constraint. Studies of grant reviewing describe reviewers juggling competing responsibilities, time pressure, and uncertainty about how to translate critique into scores. Disagreement is common, even when reviewers receive the same instructions.

When reviewers cannot evaluate your grant proposal quickly, they default to safer interpretations. That does not mean they are careless; it means they have to make decisions with incomplete information. The result is predictable critique language that sounds stylistic but is usually structural:

“The rationale is unclear.”

“The aims are diffuse.”

“Feasibility is not demonstrated.”

“The approach lacks sufficient detail for evaluation.”

What “Cognitive Friction” Looks Like In A Grant Proposal

Cognitive friction is the moment a reviewer has to stop and reconstruct your logic. Common triggers in a grant proposal include:

Your Specific Aims do not match your Significance claims (the problem and stakes shift between sections).

Aim statements describe activities, but not testable outcomes (the reviewer cannot tell what “success” means).

The approach is plausible, but the sequence is not legible (no clear staging, dependencies, or decision points).

Impact language is ambitious, but the evidence trail is thin (claims outrun what the work can show).

Your title is descriptive but lacks a memorable "hook" or acronym that helps a tired reviewer maintain focus across multiple sections.

View of thesify providing specific recommendations to improve grant title clarity and memorability for better reviewer engagement. Use the title section to create a memorable anchor for reviewers, reducing the effort required to recall your project during panel discussions.

The Alignment Rule That Reduces Reviewer Effort

To survive the initial scan, your grant proposal structure has to line up across sections. Use this simple alignment rule:

Each Aim must map to one Significance claim (why this aim matters).

Each Aim must map to one Approach logic chain (how you will test it).

Each Aim must map to one measurable endpoint (what you will deliver, observe, or decide).

If a reviewer cannot trace Aim 1 to a matching significance statement and a concrete outcome, you have created noise. That noise invites conservative scoring because the work is harder to evaluate quickly.
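If you keep your aims in a spreadsheet or outline, the alignment rule can be run as a quick mechanical check. The sketch below is one possible way to do that, not a prescribed method: the `Aim` record, its field names, and the placeholder text are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Aim:
    """One Specific Aim and the three links the alignment rule asks for."""
    label: str
    significance_claim: Optional[str] = None  # why this aim matters
    approach_chain: Optional[str] = None      # how you will test it
    endpoint: Optional[str] = None            # what you will deliver, observe, or decide


def missing_links(aim: Aim) -> list[str]:
    """Return the names of the links that are absent or blank for this aim."""
    links = {
        "significance_claim": aim.significance_claim,
        "approach_chain": aim.approach_chain,
        "endpoint": aim.endpoint,
    }
    return [name for name, value in links.items() if not (value and value.strip())]


# Placeholder content, invented for illustration only.
aims = [
    Aim("Aim 1", "Placeholder significance sentence", "Placeholder approach", "Placeholder endpoint"),
    Aim("Aim 2", "Placeholder significance sentence", None, "  "),
]

for aim in aims:
    gaps = missing_links(aim)
    print(aim.label, "aligned" if not gaps else f"missing: {', '.join(gaps)}")
```

A check like this only confirms that each link exists. Whether the significance sentence actually motivates the aim is still a judgment you have to make by reading the two side by side.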

A Quick Self-Check Before You Move On

Before you revise sentence-level style in your grant proposal, do this structural check:

Can you state each aim in one sentence, without qualifiers?

Can you name the primary outcome or decision point for each aim?

Can you point to the exact sentence in Significance that motivates each aim?

Can you explain feasibility in one line (resources, timeline logic, or prior capability)?

If you want a structured way to separate missing components from hard-to-evaluate components, start by distinguishing completeness gaps versus clarity gaps. A high-level overview can quickly flag entire sections—like Budget or Deliverables—that may have been overlooked during the drafting process.

Overview of thesify flagging missing mandatory sections in a grant proposal to ensure a complete and scorable submission. A quick "Completeness Check" helps you catch missing mandatory sections before they lead to an automatic low score.

Bridging The Logic Gap: From Specific Aims To Measurable Impact

In this section, you will turn “interesting science” into scorable impact by linking each aim in your grant proposal to a concrete outcome, a feasible approach, and a funder-facing payoff that a reviewer can verify quickly.

What Reviewers Actually Score When They Say “Impact”

Reviewers rarely mean “impact” in the abstract. They are reacting to whether your grant proposal makes it easy to judge significance and approach (and, for some schemes, additional criteria), without filling in missing logic themselves. NIH guidance on common application weaknesses is blunt about this: problems often show up as unclear significance, an under-justified approach, or feasibility that is not demonstrated.

A common failure mode is that your grant proposal aims read like activities, while your impact reads like outcomes. That mismatch creates the exact critique language you are trying to avoid (“diffuse aims,” “unclear rationale,” “overly ambitious plan”). Addressing these "completeness gaps" ensures you aren't leaving it to the reviewer to fill in your project's logic.

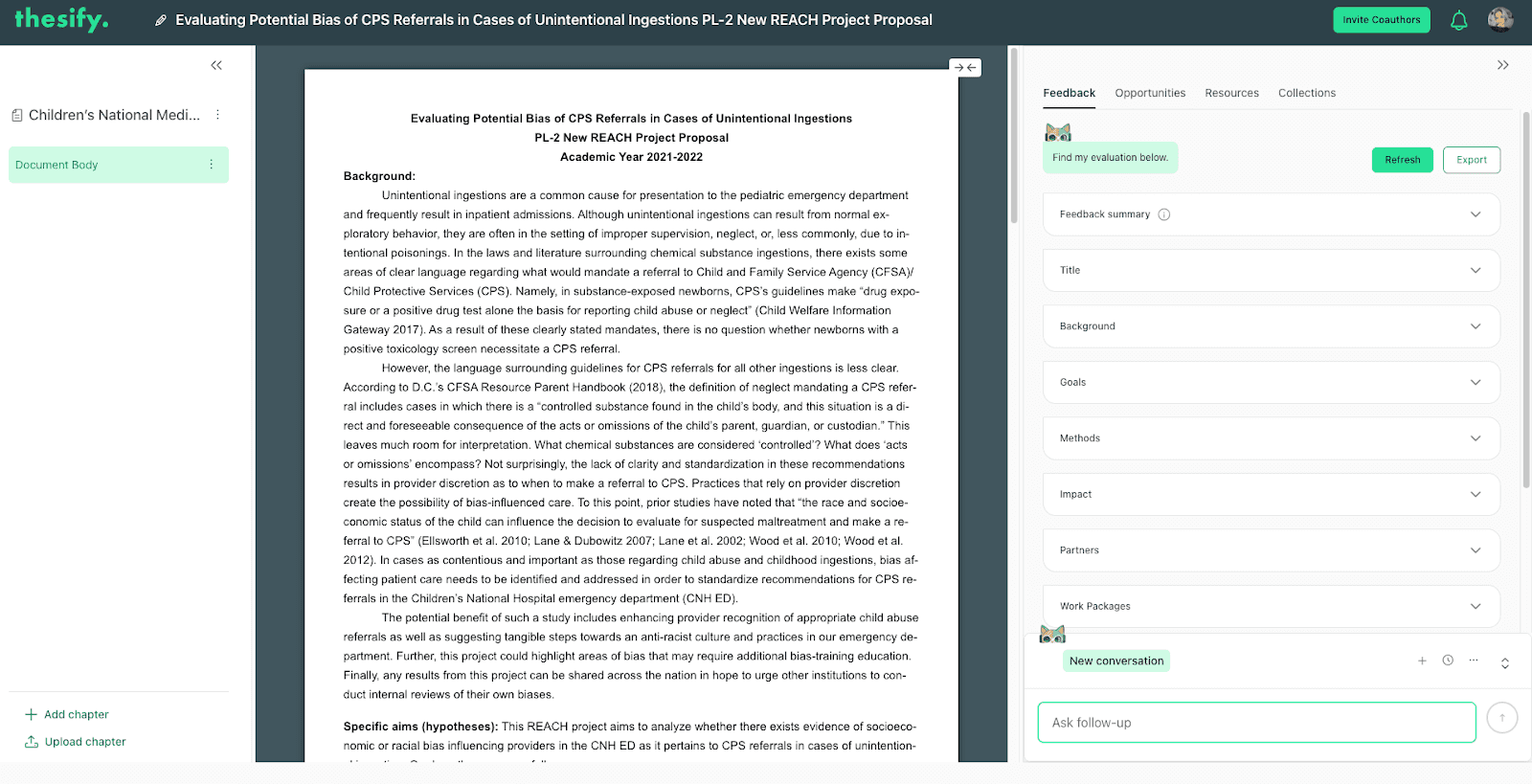

Example of thesify identifying missing elements in a grant proposal and providing concrete recommendations to turn activities into measurable outcomes.

Build A Closed Evidence Loop (So Claims Stay Testable)

Use a closed Evidence Loop to keep every claim in your grant proposal tethered to something measurable:

Hypothesis (Testable Claim): A single sentence you can evaluate within the project period (for many grants, this is commonly framed as a 3–5 year plan, but requirements vary by sponsor and scheme).

Specific Aim (Decision Point): What you will decide, estimate, or demonstrate (not just what you will “explore”).

Approach (Direct Test): The minimum methods needed to test the aim, plus the key validation step. To build reviewer trust, move beyond simple activity descriptions and apply the principles from Write So Others Can Verify to create concise, verifiable, and replicable methodological details within your proposal.

Deliverable (Observable Output): Dataset, model, protocol, tool, or effect estimate that a reviewer can picture.

Impact (Bounded Payoff): What changes if the deliverable is true, and for whom, aligned to sponsor priorities. For example, the NSF PAPPG now places heavier emphasis on "Broader Impacts" and data transparency.

This loop reduces two common rejection triggers: grant proposals that leave key study elements underspecified, and proposals that omit information reviewers need to score feasibility and approach.

Impact becomes legible when you translate it into sponsor-facing criteria. If you claim a downstream benefit, your Evidence Loop should visibly support who benefits and what "success" looks like.

Use thesify’s Grant Finder to ensure your project's logic matches the specific priorities and mission of your target sponsor.

Rewrite Your Aims So They Produce Measurable Outcomes

Most “logic gaps” show up in your grant proposal aim sentence itself. Here are two quick rewrites you can model.

Before (Activity-Based):

“Aim 1: Investigate how X influences Y in Z.”

After (Outcome-Based, Scorable):

“Aim 1: Test whether X changes Y in Z compared with [control/standard], using [method], with success defined as [primary metric/threshold] by [timepoint].”

Before (Scope Drift):

“Aim 2: Characterize mechanisms and develop an intervention.”

After (Sequenced, Feasible):

“Aim 2: Identify the mechanism most predictive of Y in Z (pre-specified criteria), then validate the top candidate in an independent sample to justify the intervention in a follow-on study.”
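One rough way to spot activity-based aims in a draft is to scan each aim sentence for verbs that describe doing rather than deciding. The sketch below assumes a small, hypothetical starter list of such verbs (`ACTIVITY_VERBS`); it is a heuristic prompt for rereading an aim, not a judgment of its quality.

```python
import re

# Hypothetical starter list of activity verbs; extend it for your field.
ACTIVITY_VERBS = {"investigate", "explore", "characterize", "study", "examine"}


def activity_verbs_in(aim_sentence: str) -> list[str]:
    """Return activity verbs found in an aim sentence, in order of appearance."""
    words = re.findall(r"[a-z]+", aim_sentence.lower())
    return [w for w in words if w in ACTIVITY_VERBS]


before = "Aim 1: Investigate how X influences Y in Z."
after = "Aim 1: Test whether X changes Y in Z compared with control."

print(activity_verbs_in(before))  # ['investigate']
print(activity_verbs_in(after))   # []
```

A flagged verb is not automatically a problem. It is a cue to check whether the sentence also names a comparison, a metric, and a timepoint, as in the rewrites above.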

This is the same logic you use when you stress-test a Results section for whether conclusions follow from evidence. If you apply the "one-line" test from Do Your Results Fit 1 Line? to your proposal, you can ensure your intended impact follows logically and clearly from your specific aims.

Map “Impact” To Sponsor Language (Example: NSF Broader Impacts And Data Sharing)

Impact becomes legible in a grant proposal when you translate it into sponsor-facing criteria and required components. For NSF proposals, Broader Impacts and data sharing expectations are baked into how proposals are reviewed.

NSF’s guidance notes that the Data Management and Sharing Plan is a required supplementary document, and it is reviewed as an integral part of the proposal, considered under Intellectual Merit or Broader Impacts (as appropriate).

Practical implication: if you claim downstream benefit (broader impacts, usability, transparency), your Evidence Loop should visibly support it (who benefits, what is shared, how it will be used, what “success” looks like).

Quick Alignment Check (Do This Before You Polish)

Each Specific Aim in your grant proposal can be stated in one sentence with a clear outcome (not a topic area).

Each aim has one primary metric, deliverable, or decision rule a reviewer can point to.

Each aim maps to one Significance claim (same problem, same stakes, same population or system).

Your approach includes at least one feasibility cue reviewers look for (controls, validation, power, timeline logic).

Using AI Tools For Pre-Submission Grant Proposal Review

In this section, you will learn how to use AI tools in grant writing without losing your voice, risking confidentiality, or creating originality problems, while still catching the errors that derail proposals late in the process.

Key tip: Use AI for verification, not generation, especially close to submission.

What Funders And Review Processes Now Expect

Two policy realities matter before you decide how to use generative AI:

Originality requirements are tightening.

For example, NIH has issued guidance stating that grant proposals “substantially developed by AI, or contain sections substantially developed by AI” will not be considered, and applicants remain responsible for ensuring submissions reflect their own original ideas.

Confidentiality is not optional.

For example, NSF has warned that uploading grant proposal content into public generative AI tools can violate confidentiality and undermine the integrity of merit review.

Practical takeaway: the safest, most defensible use of AI technology is to interrogate your draft for structure and consistency. By applying Clear Rules for AI Edge Cases—even those originally designed for the classroom or manuscript—you can ensure your use of templates or structural checks stays on the right side of agency policy while you maintain ownership of the core scientific content.

Replace “AI Writing” With “AI Stress-Testing”

If you want AI technology to help at the pre-submission stage of your grant proposal, focus on checks reviewers care about:

Completeness checks: missing risk mitigation plan, unclear timeline logic, absent data management language, undefined success criteria.

Consistency checks: aim phrasing matches approach steps, outcomes match impact claims, terminology stays stable across sections.

Evaluability checks: each aim produces an observable deliverable, each claim has a traceable evidence path, scope is bounded to the project period.

This approach also reduces the “generic, frictionless” tone reviewers increasingly associate with machine-written text, without forcing you into disclosure dilemmas about authorship.

Stress-test your logic by verifying that your aims and methodology align with the requirements of the specific call for proposals.

A Simple Responsible-Use Workflow You Can Follow

Protect confidentiality first. Do not paste proprietary methods, unpublished results, or full grant proposal text into public tools, and follow sponsor guidance on data handling.

Keep the intellectual work yours. Use tools for checking, outlining, and consistency, not for generating aims, novelty claims, or citations you have not verified.

Keep an evidence trail. Save a short record of what the tool was used for (for example, “structure check,” “missing components”), and make sure the final language reflects your own domain-specific reasoning.

Where thesify Fits In A Reviewer-First Pre-Submission Check

If you use a platform like thesify, use it the way a strong internal reviewer would, as a structured logic check. Run your draft to identify completeness gaps (missing components reviewers expect) and clarity gaps (components that exist but are hard to evaluate), then revise in your own voice.

A comprehensive view of the thesify structural checklist sections used for a final 60-minute pre-submission audit.

A practical model for using AI tools conservatively is to follow a transparent, limited-use workflow focused on verification and structure.

Last-Minute Grant Proposal Checklist For The Final 60 Minutes

In this section, you will run a fast checklist to catch scoring problems for grant proposals that slip through late-stage edits. To manage this effectively when a document has changed hands, you can adapt the framework from Build a Review Pipeline For Your Work to create a clear plan for what to revise first as the grant deadline approaches.

1) The “So What?” Outcome Line

Write one sentence that states what changes if the project succeeds, in terms a reviewer can score.

Prompt: “If we achieve Aims 1–3, then within the project period we will be able to ___, which enables ___.”

Pass condition: The sentence names an outcome that is concrete (a capability, effect estimate, dataset, tool, decision rule), not a broad aspiration.

Common failure: Impact language that is true in a general sense, but not tied to what your work will actually deliver.

2) Sponsor Keyword Alignment (Without Keyword Stuffing)

Your goal here is to show fit to the call in the sponsor’s own language.

Pull 8–12 phrases from the call text (mission terms, priority areas, required components).

Check that your Summary, Specific Aims, and Significance each include the most relevant terms at least once, where it reads naturally.

Pass condition: Your terminology matches the sponsor’s framing, and you do not rename their priorities with your lab’s jargon.
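The phrase check above can be scripted if you have your sections as plain text. This is a minimal sketch under stated assumptions: the function name `phrase_coverage`, the sample phrases, and the sample section text are all invented for illustration, and matching is simple case-insensitive substring search, so it will miss paraphrases and inflected forms.

```python
def phrase_coverage(sections: dict[str, str], phrases: list[str]) -> dict[str, list[str]]:
    """Map each section name to the sponsor phrases it does not contain."""
    report = {}
    for name, text in sections.items():
        lowered = text.lower()
        report[name] = [p for p in phrases if p.lower() not in lowered]
    return report


# Placeholder phrases and section text, invented for illustration only.
phrases = ["data sharing", "workforce development"]
sections = {
    "Summary": "We will support workforce development and open data sharing.",
    "Specific Aims": "Aim 1 builds a data sharing protocol.",
    "Significance": "This gap limits progress in the field.",
}

for name, missing in phrase_coverage(sections, phrases).items():
    print(name, "->", missing or "all phrases present")
```

Treat a missing phrase as a prompt to reread the section, not as an instruction to insert the term. The goal is fit that reads naturally, not keyword stuffing.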

3) Figure-First Logic Check

Reviewers often use figures and tables as anchors, even in proposals, because visuals reduce cognitive load. Your visuals should support evaluability, not decoration.

Prompt: “Could a reviewer explain the project’s logic in 20 seconds using only the figures?”

Pass condition: At least one visual shows the pipeline from aims to methods to outcomes (even a simple schematic), with labels that match your aim language.

Common failure: Figures are technically impressive but disconnected from the aim structure, so they do not clarify what will be tested and when.

4) Feasibility Proof, Not Feasibility Vibes

Do not leave feasibility implied. Reviewers expect to see explicit cues regarding your project’s capacity, including the specific role of partners and a clear plan for meeting all required criteria.

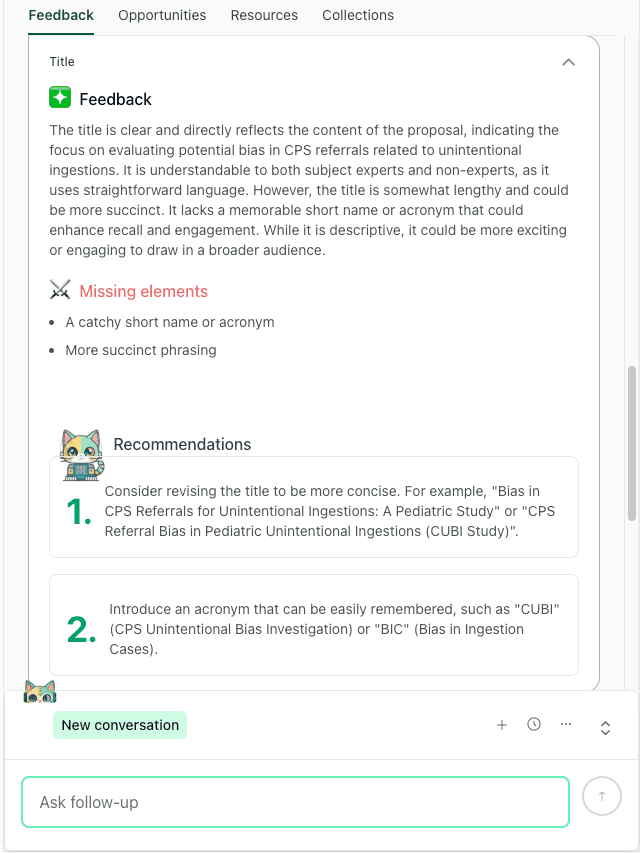

thesify identifying an insufficient partners section and recommending detailed criteria for demonstrating project feasibility.

Ensure every feasibility anchor, including your partnership network, is clearly documented to prove you can complete the work. Make it explicit in the one place reviewers expect to see it: your approach logic.

If your design relies on effect detection, include a power or sample size justification (even a brief one), plus the key assumptions.

If power is not the central feasibility constraint (for example, qualitative work, engineering builds), include the equivalent feasibility cue (access, throughput, pilot data, validation plan, timeline capacity).

Pass condition: A reviewer can point to one paragraph or table and say, “Yes, they can complete this.”
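For designs that rely on detecting a difference between two groups, a brief power justification can be as short as one calculation plus its assumptions. The sketch below uses the standard normal-approximation formula for a two-sided, two-sample comparison of means; the function name `n_per_group` and the example inputs (standardized effect size 0.5, alpha 0.05, power 0.80) are illustrative assumptions, not recommendations for any particular study.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group n for a two-sided, two-sample comparison of means.

    Uses the normal approximation n = 2 * (z_{1-alpha/2} + z_{power})^2 / d^2,
    where d is the standardized effect size (Cohen's d).
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


print(n_per_group(0.5))  # 63 per group under these assumptions
```

The normal approximation tends to run slightly below an exact t-based calculation, so confirm the final number with your statistician or a dedicated power tool. What reviewers need to see is the effect size you assumed and where that assumption comes from.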

5) Non-Redundant Innovation Check

This catches a quiet but common weakness: “innovation” that restates “significance.”

Prompt: “Does Innovation answer ‘what is new about the approach,’ not ‘why the topic matters’?”

Pass condition: Innovation names the new method, model, dataset, analytic approach, or conceptual move, and states what it enables that was not previously feasible.

Common failure: Innovation reads like a second significance paragraph with different adjectives.

If you want a structured way to run these checks quickly, you can use thesify to flag repeated section logic and missing components, then revise in your own voice.

Grant Writing Strategy FAQ: Reviewer Questions You Can Preempt

How many specific aims should you include?

Most reviewers respond well when each aim has a distinct decision point and a bounded deliverable. If adding an aim forces you to compress feasibility or blur outcomes, cut it.

What do reviewers mean by “overly ambitious”?

Usually it means your timeline, sample, or method throughput cannot plausibly produce the promised deliverables within the project period, given the dependencies you describe. Often, a proposal feels "overly ambitious" or "diffuse" because it includes superfluous components that distract from the core hypothesis. Trimming these non-essential details allows the reviewer to focus on the primary logic chain of your project.

thesify identifying non-essential sections in a grant proposal to help researchers improve focus and reduce reviewer cognitive load.

Removing "noise"—such as overly lengthy administrative details—makes your central research "signal" much stronger.

How do you show feasibility without adding pages?

Put one explicit feasibility cue where reviewers look for it: a throughput assumption, access statement, pilot anchor, or a minimal power justification tied to the primary outcome.

How do you avoid AI-sounding grant writing?

Keep discipline-specific details concrete (constraints, assumptions, thresholds, decision rules), and use tools for structure checks rather than generating core scientific claims.

Grant Writing Strategy Takeaways For 2026 Review Panels

In 2026 review panels, the proposals that get advocated for are usually the ones that are easy to evaluate on a first pass. If a reviewer can quickly see what you will do, why it matters, and what success looks like, you have made it easier for them to score you well and defend that score.

If you take one thing from this post, make it this: reduce cognitive friction by keeping a closed loop between your aims, your approach, and your impact claims. When that loop is explicit, your proposal reads as feasible, focused, and worth advocating for in the room.

Ready to move beyond drafting and start stress-testing?

Sign up to thesify for free to use our Grant Finder and Grant Reviewer to ensure your research signal isn’t lost in the noise.

Related Posts:

How to Get Feedback on a Grant Proposal Through thesify: Securing grant funding requires more than a great idea; it demands a well‑crafted proposal that aligns with the funder’s criteria and tells a clear story. Many applicants ask colleagues for feedback or consult generic checklists, yet these approaches often lack structure or timeliness. Learn how to prepare, upload and revise a grant proposal using thesify’s new feedback feature. Get section‑by‑section guidance, identify missing components and refine your work.

Pre‑Submission Review vs Peer Review – Key Differences: Knowing the distinction between these reviews affects your publication timeline, workload and expectations. The two reviews serve different purposes, and misunderstanding them can waste your time. Get advice on strategic planning of both stages in this article. Plus, learn more about the role of AI in pre-submission and peer review, including how publishers are experimenting with AI on their side of the process and how large journal groups use algorithms to suggest potential reviewers or flag manuscripts that may fall outside a journal’s scope or raise integrity concerns.

AI Tools for Academic Research: Criteria & Best Picks 2025: Academic integrity underpins trust in research. When generative AI tools produce text on your behalf, there is a risk of misrepresenting authorship. Many universities now require students to obtain supervisor approval and disclose any AI assistance. Learn what makes an AI tool truly academic. Discover criteria for data privacy, reproducibility and integrity, plus recommended tools for PhD research.