Comparative Analysis in Research: Matrix Framework

Comparative analysis in research is a structured way to evaluate competing methodologies, approaches, theories, or technologies against a shared set of criteria. It is typically used when you need to justify a research choice, explain trade-offs, or show why an alternative performs differently under specific constraints (data regime, setting, resources, ethics, or governance).

This guide provides a comparative research framework built around a methodological comparison matrix. You will learn how to define comparable units of analysis, select criteria that match your research decision, document evidence for strengths and weaknesses, and write a context-scoped judgement about fit.

If your project requires a defensible rationale for why you chose one approach over another, the matrix-based method below gives you a traceable structure for making that case in a thesis chapter, article, or report.

What Comparative Analysis Means in Research Writing

Comparative analysis in research is a criteria-based evaluation of two or more methods, approaches, theories, or technologies. Each option is assessed against the same standards, using traceable evidence, with explicit scope conditions. The output is a context-specific judgement about fit and trade-offs, rather than parallel description or a universal ranking.

Comparative analysis goes beyond summary—it offers a balanced view of how approaches perform in different contexts.

What You Can Compare (Units of Analysis)

A comparative analysis is most coherent when your units serve the same function in the research design. Common units include:

Methodologies: surveys, interviews, ethnography, case study, experiments, observational designs

Analytical approaches: regression vs matching, QCA vs process tracing, thematic analysis vs grounded theory

Theoretical frameworks: models that aim to explain the same phenomenon or mechanism

Technologies and tools: software, platforms, instruments, pipelines, or measurement technologies used to achieve the same task

When the unit definition is explicit, criteria selection becomes straightforward, and the comparison remains commensurate across options.

Comparative Analysis vs Literature Review vs Systematic Review

This section clarifies comparative analysis vs literature review and distinguishes both from a systematic review, so you can choose the right genre for your research purpose and describe it accurately in your thesis or scientific paper.

Literature Review: Synthesis and Positioning

A literature review synthesises what is known in a field. Its core functions are to:

Map key debates, concepts, and empirical findings

Identify patterns, tensions, and gaps in existing scholarship

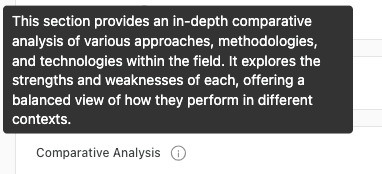

A literature review maps the field. Use thesify’s resource feature to organize key debates before you start selecting specific methods to compare.

Position your research question and contribution within the current state of knowledge

The organising logic is interpretive synthesis. Even when a literature review compares studies, the endpoint is usually a structured account of what the field shows.

Systematic Review: Comprehensive Retrieval and Appraisal

A systematic review is designed to answer a specific research question through transparent, replicable procedures. It typically includes:

Pre-defined inclusion and exclusion criteria

Comprehensive search and screening procedures

Critical appraisal of study quality or risk of bias

Structured synthesis (narrative, quantitative, or meta-analytic where appropriate)

The organising logic is methodological transparency and coverage. The goal is not to justify your method choice, but to produce an evidence-based answer to a defined question using a documented retrieval and appraisal process.

Comparative Analysis in Research: Adjudicating Alternatives Under Constraints

A comparative analysis in research evaluates two or more plausible alternatives, such as methodologies, analytical approaches, theoretical frameworks, or technologies, against a shared set of criteria. It is used to:

Justify a selection (why Option A is a better fit than Option B for this project)

Explain trade-offs (what you gain and lose with each option)

Specify scope conditions (where an option performs well, and where it becomes weak)

The organising logic is criteria-based evaluation, often operationalised through a methodological comparison matrix.

Key Differences: Literature Review Evidence vs Comparative Analysis Function

A comparative analysis often draws on the same body of literature used in your literature review. The difference is the argumentative task:

The literature review builds synthesis and context.

The comparative analysis uses evidence to evaluate alternatives against criteria, in order to justify a decision under constraints.

Benefits of Comparative Analysis in Research

This section explains why comparative analysis is used in academic research writing and gives you rationale statements that work well in proposals, methods chapters, and thesis introductions.

Method justification through explicit evaluation of alternatives

Comparative analysis supports method selection by showing how competing approaches perform against the same criteria. This is particularly useful when you need to justify why an established method was not adopted, or why a newer approach is warranted given your research aims.

Context-sensitive decisions rather than generic “best method” claims

Research choices depend on constraints such as data availability, sample size, setting, resources, ethical risk, and governance requirements. Comparative analysis makes those constraints explicit and evaluates options accordingly, which strengthens claims about methodological fit and feasibility.

Revealing assumption mismatches before they become design problems

Many methods and technologies embed requirements that are not always compatible with your project, for example measurement precision, data structure, identifiability conditions, computational demands, or access to specific populations. A comparative analysis surfaces these mismatches early and documents them clearly.

Identifying gaps and research opportunities

A matrix-based comparison often shows that no existing option satisfies a key set of constraints simultaneously. That finding can justify a methodological adaptation, a hybrid design, a tool development contribution, or a clearly bounded limitation that motivates future research.

Step 1: Define the Decision and Scope Your Units of Analysis

This section shows you how to define a research decision that can be compared rigorously, and how to scope your units of analysis so the comparison stays commensurate across options.

Define the Decision You Are Making

Start by stating the decision in one sentence. Comparative analysis is strongest when it is anchored to a specific choice, for example:

selecting a methodology for data collection

selecting an analytical approach for inference or interpretation

selecting a theoretical framework to explain a mechanism

selecting a technology or tool to implement measurement or analysis

A useful formulation is: “I am choosing X in order to achieve Y under constraints Z.”

This keeps the comparison oriented around purpose rather than preference.

Scope the Comparison to Comparable Units

A comparative analysis becomes difficult to defend when the comparison set is too large or the units operate at different levels. A practical scope is:

2–4 units of analysis (enough to show due diligence without diluting the evaluation)

Same level of analysis, so the criteria are commensurate:

data collection (for example surveys vs interviews)

data analysis (for example regression vs QCA)

interpretation/theory-building (for example two competing theoretical models)

Same outcome target, so you are comparing options designed to solve the same problem:

explanation (causal inference, mechanism testing)

prediction (forecasting, classification)

classification/measurement (coding, detection, instrumentation)

theory-building (concept development, typology construction)

If the units differ in level or outcome target, the criteria you would need to evaluate them will not align, and the matrix will produce misleading trade-offs.
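The scoping rules above can be checked mechanically before any criteria are written. What follows is a minimal Python sketch, not a prescribed tool: the `Unit` record, the `check_scope` function, and the level and target labels are hypothetical names chosen for illustration, and the 2–4 range is the practical scope suggested above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    """One option in the comparison set (hypothetical record for illustration)."""
    name: str
    level: str   # e.g. "data collection", "data analysis", "theory"
    target: str  # e.g. "explanation", "prediction", "measurement"


def check_scope(units: list[Unit]) -> list[str]:
    """Return scoping problems; an empty list means the set looks commensurate."""
    problems = []
    if not 2 <= len(units) <= 4:
        problems.append(f"{len(units)} units compared; the suggested range is 2-4")
    levels = {u.level for u in units}
    if len(levels) > 1:
        problems.append(f"mixed levels of analysis: {sorted(levels)}")
    targets = {u.target for u in units}
    if len(targets) > 1:
        problems.append(f"mixed outcome targets: {sorted(targets)}")
    return problems


# Illustrative comparison set: the third unit sits at a different level.
units = [
    Unit("regression", level="data analysis", target="explanation"),
    Unit("QCA", level="data analysis", target="explanation"),
    Unit("interviews", level="data collection", target="explanation"),
]
for problem in check_scope(units):
    print(problem)
```

In this example the check reports mixed levels of analysis, because interviews are a data collection method while regression and QCA are analytical approaches. Dropping the third unit leaves a set that passes.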

Set Inclusion Criteria for What You Compare

Define the boundaries of your comparison explicitly. Typical inclusion criteria include:

Time window (for example “approaches developed or validated since 2015”)

Domain constraints (population, setting, field-specific standards)

Data type and availability (qualitative interviews, panel data, administrative records, imaging data)

Minimum evidence threshold (peer-reviewed validation, benchmark results, established methodological critique)

Feasibility constraints (compute, cost, access, required expertise)

These criteria prevent scope creep and make your selection defensible, especially when you explain why some alternatives were not included.

You can use Chat with Theo to quickly identify how other studies defined their boundaries, helping you set defensible inclusion criteria for your own matrix.

Mini Template (Copy and Paste)

Decision:

Constraints:

Units included:

Units excluded (and why):

Step 2: Build the Methodological Comparison Matrix

Next, it’s time to construct a methodological comparison matrix that supports evaluating research approaches in a traceable, criteria-based way. This framework is the core asset of a comparative analysis because it keeps the comparison commensurate across options and forces your claims to be explicit.

Derive Criteria From Constraints and Standards

Your criteria should be derived from what your project needs to achieve and what your field treats as legitimate evaluation standards. Start by anchoring criteria to the following sources, then translate them into measurable or clearly definable categories for your matrix.

Research question and inferential goal

Define what the method or approach is being asked to do. Criteria differ depending on whether your goal is explanation, prediction, classification, measurement, or theory-building.

Data regime (type, quality, missingness, measurement)

Specify the structure and limitations of the data you have or can realistically obtain. Criteria commonly include minimum sample size, tolerance for missingness, measurement error sensitivity, and compatibility with qualitative versus quantitative evidence.

Validity priorities and bias sensitivity

Identify which validity claims your study must support and which bias risks are plausible in your setting. Relevant criteria might include internal validity, external validity, ecological validity, robustness to confounding, and vulnerability to selection or measurement bias.

Feasibility constraints (skills, compute, timeline)

Criteria should reflect what can be implemented within the project’s operational limits, including technical expertise, computational requirements, time to execution, and cost.

Ethics and governance (consent, privacy, risk)

Where relevant, include criteria for participant burden, privacy and identifiability risk, consent complexity, data governance requirements, and downstream ethical implications.

A useful check is whether each criterion helps answer the decision defined in Step 1. If a criterion does not affect selection, it does not belong in the matrix.

Add Warrants (Why Each Criterion Matters)

A methodological comparison matrix is strongest when it includes a short justification for why each criterion is relevant to your specific research decision. These warrants prevent the matrix from looking generic and make the evaluation legible to supervisors, reviewers, committee members, or review panels.

For each criterion, write one sentence in one of the following forms:

“This criterion matters because…”

“This criterion is binding in this project because…”

Example warrants (for illustration):

Sample size requirements: This criterion matters because the available dataset limits statistical power and affects the stability of estimates.

Interpretability: This criterion is binding in this project because the analysis must be defensible to a non-technical stakeholder audience.

Privacy risk: This criterion matters because the data include potentially identifiable information governed by strict access and storage requirements.

When warrants are explicit, your comparative analysis reads as decision logic rather than a list of preferences, and your final verdict becomes easier to justify as context-dependent methodological fit.
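If you keep your matrix in a script or notebook, one way to enforce the warrant rule is to store each criterion together with the constraint it serves and its warrant sentence, then flag any criterion where either is missing. The sketch below is illustrative only: `Criterion` and `unwarranted` are hypothetical names, the first two warrants are shortened from the examples above, and "Popularity" is an invented criterion included to show what a flagged entry looks like.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    constraint: str  # the project constraint or decision element this criterion serves
    warrant: str     # one sentence: why the criterion matters for this decision


def unwarranted(criteria):
    """Names of criteria that lack a constraint link or a warrant sentence."""
    return [
        c.name
        for c in criteria
        if not c.constraint.strip() or not c.warrant.strip()
    ]


criteria = [
    Criterion(
        "Sample size requirements",
        "data regime",
        "This criterion matters because the available dataset limits statistical power.",
    ),
    Criterion(
        "Interpretability",
        "stakeholder audience",
        "This criterion is binding in this project because the analysis must be "
        "defensible to a non-technical stakeholder audience.",
    ),
    Criterion("Popularity", "", ""),  # invented entry with no warrant
]
print(unwarranted(criteria))
```

A criterion that appears in this list either needs a warrant tied to the Step 1 decision or should be removed from the matrix.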

Step 3: Populate the Matrix With Comparable Evidence

This step requires filling in your methodological comparison matrix using evidence that is comparable across methods, approaches, or technologies. The goal is to support evaluative claims with traceable sources.

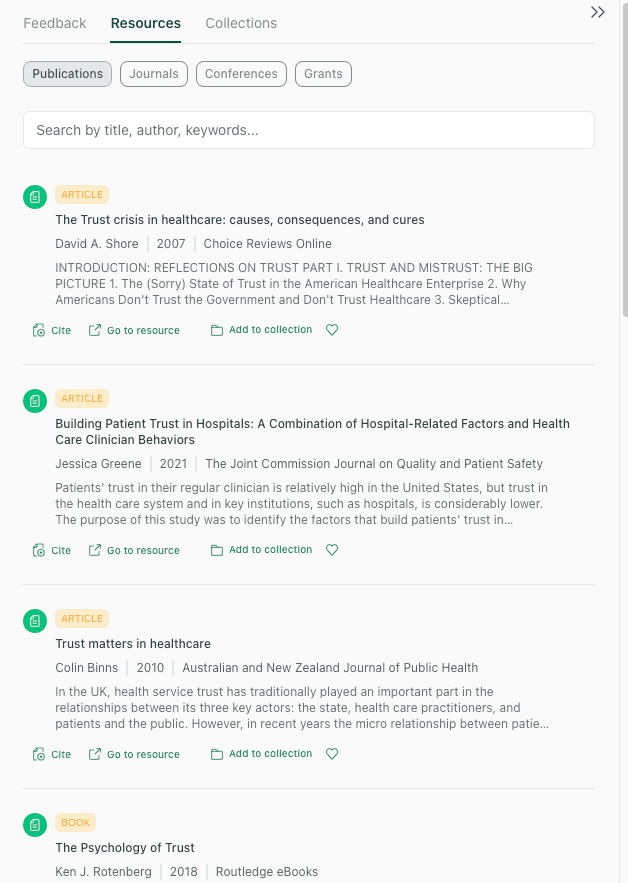

Extracting main claims directly allows you to populate your matrix with precise findings rather than vague summaries.

Evidence Types That Support Comparative Analysis in Research

Prioritise evidence that makes constraints, performance, and failure modes explicit. Common evidence types include:

Benchmarks and comparative evaluations (including head-to-head studies where available)

Validation studies (measurement validity, robustness checks, external validation)

Methodological critiques and review articles (especially those specifying assumptions and known limitations)

Field standards and reporting guidelines (discipline-specific norms, required checks, quality criteria)

Limitations sections and “threats to validity” discussions in foundational papers (often the most direct source for scope conditions)

When you extract limitations and scope conditions, it helps to use a structured rubric that flags missing reporting details and overclaims, which is exactly what thesify’s in-depth Methods, Results, and Discussion feedback is designed to surface.

Automated feedback from thesify can flag missing reporting details in your source papers (or your own draft), ensuring you don't build your comparison on incomplete evidence.

When evidence quality varies across options, note that explicitly. Uneven evidence bases are common and can be handled transparently in the matrix.

Extraction Approach: Keep Notes Commensurate Across Criteria

Populate the matrix criterion-by-criterion rather than paper-by-paper. This reduces drift and ensures each option is assessed against the same standards.

Use standardised notes per criterion

For each criterion, extract the same categories of information for every option (for example: stated assumptions, required data properties, typical sample sizes, documented failure conditions, and reporting requirements). Write notes in a consistent format so differences are interpretable.

thesify’s Digest feature isolates the core methodology (e.g., Qualitative Analysis) so you can quickly categorize each paper in your matrix.

Record uncertainty explicitly

Comparative analysis benefits from precision about what is known versus inferred. Use clear labels such as:

Unknown: the source does not report this information

Contested: studies disagree, evidence base is mixed

Context-specific: performance depends on setting, population, or data regime

Inference: a reasoned implication based on stated assumptions (label it as such)
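The labels above can be attached to each matrix cell so that unsupported entries are easy to find. The following is a minimal sketch under stated assumptions: the `Status` enum, the `Cell` record, and `needs_attention` are hypothetical names, the `REPORTED` label is an addition for cells backed directly by a source, and the notes and source strings are placeholders rather than real findings or references.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    REPORTED = "reported"  # added label (assumption): directly stated in a source
    UNKNOWN = "unknown"
    CONTESTED = "contested"
    CONTEXT_SPECIFIC = "context-specific"
    INFERENCE = "inference"


@dataclass
class Cell:
    note: str
    status: Status
    source: str = ""  # citation, traceable note, or stated basis; empty if none


# matrix[criterion][option] -> Cell, filled criterion-by-criterion.
# All entries are placeholders for illustration.
matrix = {
    "Data requirements": {
        "Option A": Cell("Requires panel data", Status.REPORTED, "Source 1, limitations section"),
        "Option B": Cell("Not stated", Status.UNKNOWN),
    },
    "Failure modes": {
        "Option A": Cell("Likely unstable in small samples", Status.INFERENCE),
        "Option B": Cell("Studies disagree", Status.CONTESTED, "Sources 2 and 3"),
    },
}


def needs_attention(matrix):
    """Cells that make a claim (anything but UNKNOWN) without a source or stated basis."""
    flagged = []
    for criterion, row in matrix.items():
        for option, cell in row.items():
            if cell.status is not Status.UNKNOWN and not cell.source:
                flagged.append((criterion, option, cell.status.value))
    return flagged


print(needs_attention(matrix))
```

Here the only flagged cell is the inference about Option A's failure modes, which has no stated basis. Cells labelled unknown are not flagged, since the label itself records that the source is silent.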

Managing the Cognitive Load of Cross-Reading Dense Sources

Comparative work often fails at the extraction stage because the reader is forced to hold details from one paper in working memory while reading another. That increases the risk of inconsistent note-taking, over-reliance on recall, and “false equivalence” across options. A comparison table reduces this problem only if the evidence is extracted in a controlled, repeatable way.

Practical Side-by-Side Workflow

A practical way to reduce cognitive load is to review foundational sources side-by-side while extracting matrix evidence. In thesify, you can quickly review a paper’s main methodology using thesify’s Digest feature and pull comparable information into your criterion notes as you read.

The Digest feature isolates core methodological details—like design type and analysis—so you can populate your matrix criteria without getting lost in the full text.

When populating the matrix, prioritise extraction of:

Assumptions (what must be true for the method or approach to work as claimed)

Limitations and scope conditions (where it performs well, where it weakens)

Data requirements (structure, scale, quality, measurement)

Failure modes and instability points (where results become unreliable or biased)

Try using the Chat with Theo feature with prompts that map directly to matrix columns:

“Extract scope conditions stated by each approach.”

“List assumptions required for identification or validity.”

“Compare where each approach is known to fail or become unstable.”

Use Chat with Theo to extract specific findings or distinctions directly, saving you from hunting through dense results sections.

Keep the outputs tied to specific criteria and sources, then translate them into concise matrix entries with citations or traceable notes.

Step 4: Make Evaluative Claims and Write the Context-Dependent Verdict

This step requires moving from a completed methodological comparison matrix to an argument that justifies selection. The focus is on evaluative language that makes trade-offs and scope conditions explicit, which is the core of comparative analysis in research.

If you want a check on whether your comparative claims are appropriately scoped, thesify’s in-depth Methods, Results, and Discussion feedback flags overclaiming and missing qualifiers in the exact sections where these issues typically surface.

Before finalizing your verdict, use thesify’s feedback to ensure your claims match your evidence—preventing the "overclaiming" trap common in comparative analysis.

Replace Global Rankings With Scoped Judgements

A comparative analysis rarely supports a universal ranking. The more defensible approach is a scoped judgement that ties the verdict to the constraints and decision defined in Step 1.

Use sentence structures that keep the logic visible:

“Under constraints X, Option A is preferable because…”

This formulation forces you to name the constraints that drive the decision (for example data regime, feasibility limits, ethical risk, required validity claims), then justify the conclusion using matrix evidence.

“If constraint Y changes, the ordering reverses because…”

This is a concise way to show sensitivity to context. It demonstrates that your judgement is not arbitrary, and that it follows from criteria rather than preference.

Make boundary conditions explicit

For each option, state the conditions under which it becomes weak, unreliable, or inappropriate. Boundary conditions are typically drawn directly from the matrix entries on assumptions, data requirements, and failure modes.

A practical way to operationalise this in your writing is to treat each criterion as a mini-claim:

criterion → evidence for A and B → implication under your constraints → interim scoped conclusion.

Use Reciprocal Illumination to Deepen the Argument

Reciprocal illumination is a comparative technique where each option is used to reveal the blind spots of the other. It strengthens the analysis by showing that the evaluation is not a list of isolated attributes, but a structured account of what each approach foregrounds and what it obscures—a strategy central to writing with depth and perspective through debate.

Use thesify’s feedback to synthesize complex findings across papers, helping you see the "big picture" trade-offs before you write your final verdict.

Common trade-offs to map explicitly include:

Interpretability vs performance

Higher-performing approaches may be harder to interpret or justify, particularly when transparency is required for stakeholder-facing claims.

Internal validity vs ecological validity

Designs that maximise control can reduce real-world relevance, while naturalistic designs can increase contextual realism but introduce additional bias risks.

Scalability vs richness

Methods that scale to large samples often reduce granularity, while high-resolution approaches can become infeasible or non-representative at scale.

Flexibility vs identifiability

Flexible models and frameworks can accommodate complexity, but the assumptions required to identify effects or mechanisms may become harder to defend.

When you write the verdict, use these trade-offs to explain why an apparent weakness can be acceptable, or why a strength can be irrelevant, given your project constraints. This keeps the verdict context-dependent and makes the comparative logic legible to the reader.

What Makes a Comparative Analysis Strong?

This section provides a supervisor-ready checklist you can use as a pre-submission audit for a comparative analysis in research, especially when you are presenting a methodological comparison matrix and a context-dependent verdict. A useful final check is whether the section reads as evaluation (criteria, evidence, scope conditions) rather than descriptive commentary.

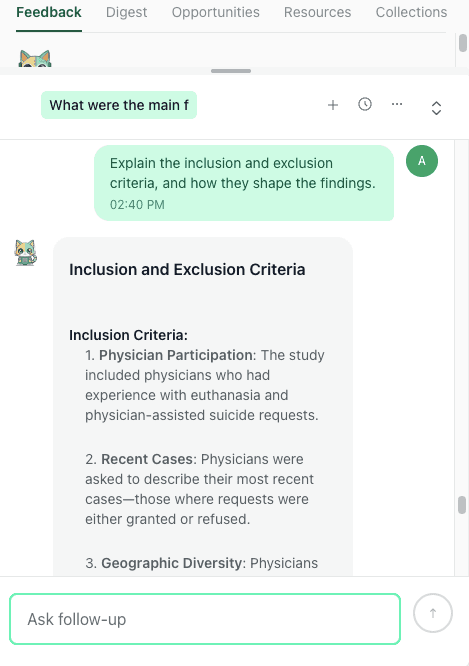

Example of thesify’s Feedback view, illustrating the level of structured evaluation you can use to audit clarity and argument support.

Supervisor-Ready Comparative Analysis Checklist

Criteria are justified with warrants tied to project constraints

Each criterion in your comparative research framework should be linked to a constraint or requirement of the study (data regime, inferential goal, feasibility, ethics, governance). Warrants should be stated explicitly, not implied.

Claims are evidence-backed and traceable

Every judgement in the methodological comparison matrix should be supported by citations or documented extraction notes (benchmarks, validation studies, standards, or limitations sections). Avoid unreferenced generalisations (for example “commonly used” or “more robust”) unless you specify the evidential basis.

thesify’s feedback checks if your figures and data are properly integrated into your argument, ensuring your comparative verdict is fully supported by the evidence.

Assumptions and failure modes are explicit

A strong comparative analysis identifies the assumptions that must hold for each option and documents where each option is known to fail, become unstable, or introduce bias. These scope conditions should be visible in the matrix and carried into the written verdict.

The verdict is scoped, not universal

The conclusion should specify the constraints under which the preferred option is the best fit. If the ordering would change under different constraints, state that explicitly.

Alternatives are represented fairly (no straw-manning)

Each option should be described in terms its proponents would recognise, including its intended use-case and strengths. Balance is demonstrated through accurate characterisation and evidence, not symmetry.

Limitations of the comparison are stated

Note where the evidence base is uneven, where criteria are difficult to operationalise, or where options are not fully commensurate. Stating these limits strengthens credibility and prevents overclaiming.

How to Write the Comparative Analysis Section (Thesis and Paper)

This section explains how to structure a comparative analysis in research so it reads as evaluation. The options below align the writing structure with the logic of a methodological comparison matrix, which improves analytic clarity and makes the final verdict easier to justify.

Point-by-Point (Criterion-Led) Structure

This structure organises the section by comparison criteria, assessing each option against the same criterion before moving to the next. It is typically the strongest format for methodological justification because it keeps the evaluation commensurate.

Use it when:

your criteria are clearly defined and stable across options

you need to show trade-offs explicitly (fit under constraints)

you want the comparative logic to be visible to the reader

How it works:

Criterion 1 (with warrant) → evidence for Option A → evidence for Option B → scoped implication

Criterion 2 → repeat

Synthesis and context-dependent verdict at the end

This format supports evaluating research approaches directly and reduces the risk of drifting into separate mini literature reviews.

Block Structure With an Explicit Synthesis Layer

The block structure presents Option A as a coherent block, then Option B, followed by a comparative synthesis. It can be acceptable, but only when the synthesis is explicitly mapped back to criteria.

Use it when:

each option requires a short technical exposition before evaluation is meaningful

your audience needs conceptual grounding before you compare

the options are complex enough that criterion-led writing would become fragmented

To keep it rigorous:

End each block with a brief “criterion mapping” paragraph that signals how the option performs against the matrix criteria.

In the synthesis layer, structure the comparison by criteria, not by narrative flow.

Without an explicit synthesis layer tied to criteria, the block approach often reads as two summaries with an under-specified conclusion.

Reusable Paragraph Skeleton for Comparative Analysis Writing

Use the following paragraph structure within a criterion-led or synthesis section. It is designed to keep warrants, evidence, and scope conditions visible.

Criterion + warrant: State the criterion and why it matters for your project constraints.

Evidence for Option A: Provide the relevant claim with citation or traceable note.

Evidence for Option B: Provide the parallel claim with citation or traceable note.

Implication under constraints: Explain what the difference means for your research decision.

Interim verdict + scope condition: State a conditional judgement and when it would change.

You can reuse this skeleton across criteria to produce a consistent comparative analysis section that matches the logic of your methodological comparison matrix and supports a context-dependent final verdict.
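For writers who draft from structured notes, the five-part skeleton can be represented as a small record that refuses to render until every part is filled in. This is only a sketch of one possible workflow: `CriterionParagraph`, `missing_parts`, and `render` are hypothetical names, and the bracketed strings are placeholders for your own evidence and citations.

```python
from dataclasses import dataclass


@dataclass
class CriterionParagraph:
    criterion: str
    warrant: str
    evidence_a: str
    evidence_b: str
    implication: str
    verdict: str
    scope_condition: str

    def missing_parts(self):
        """Field names that are still empty, in skeleton order."""
        return [name for name, value in vars(self).items() if not value.strip()]

    def render(self) -> str:
        """Join the parts into one paragraph; refuse if any part is missing."""
        missing = self.missing_parts()
        if missing:
            raise ValueError(f"incomplete paragraph, missing: {missing}")
        return " ".join([
            f"{self.criterion} matters because {self.warrant}.",
            self.evidence_a,
            self.evidence_b,
            self.implication,
            f"{self.verdict} {self.scope_condition}",
        ])


# Draft with placeholder evidence and no scope condition yet.
draft = CriterionParagraph(
    criterion="Interpretability",
    warrant="the analysis must be defensible to a non-technical stakeholder audience",
    evidence_a="Option A [evidence and citation placeholder].",
    evidence_b="Option B [evidence and citation placeholder].",
    implication="Under this constraint, [implication placeholder].",
    verdict="Under constraints X, Option A is preferable.",
    scope_condition="",
)
print(draft.missing_parts())
```

The draft above reports `scope_condition` as missing, which mirrors the most common gap in comparative paragraphs: a verdict stated without the condition under which it would change.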

A final check is whether the comparative paragraph advances the paper’s central claim rather than accumulating criteria without argumentative payoff.

Use a thesis support check in thesify to confirm your comparative section reinforces your main argument, not a parallel analysis track.

Common Failure Modes in Comparative Analyses in Research

Invalid unit comparisons (mixed levels of analysis)

A frequent issue is comparing units that do not sit at the same level, for example a data collection method against an analytical approach, or a theoretical framework against a software tool. When levels are mixed, the criteria become non-commensurate and the verdict becomes difficult to justify.

Criteria not aligned with the decision

Criteria need to follow from the decision defined in Step 1. If the decision is about causal explanation, prediction-focused criteria will distort the comparison. If the decision is about feasibility in a constrained setting, performance-only criteria will misrepresent fit.

Hidden assumptions, especially around data and identifiability

Many comparisons are undermined by unstated requirements such as measurement precision, missingness mechanisms, independence assumptions, model identifiability, or access to specific populations. A strong comparison makes these assumptions explicit and treats them as evaluative criteria where relevant.

No boundary cases (overconfident claims)

Comparative analyses often fail when conclusions are written as universal rankings rather than context-scoped judgements. Boundary conditions should be stated clearly, including where an approach becomes unstable, biased, infeasible, or ethically problematic.

“Balance” treated as symmetry rather than accurate representation

Balance entails representing each option fairly, in its intended use-case, supported by evidence. Where the evidence base is uneven or one option is clearly weaker under the project constraints, the analysis should reflect that directly.

FAQs About Comparative Analysis in Research

How many approaches should I compare in a PhD thesis?

In most cases, two to four approaches is a defensible range. It is enough to demonstrate due diligence and to support method justification, while keeping the methodological comparison matrix commensurate and evidence-based. If you include more than four, the comparison often becomes shallow unless you narrow the criteria set or restrict scope (for example to one data regime or one outcome target).

How do I justify my comparison criteria?

Justify criteria by linking each one to your research decision and constraints. A clear method is to add a one-sentence warrant for each criterion, for example: “This criterion matters because…”.

Strong warrants typically reference:

your inferential goal (explanation, prediction, theory-building)

your data regime (type, quality, missingness, measurement)

validity priorities and bias sensitivity

feasibility limits (skills, compute, timeline)

ethics and governance requirements (consent, privacy, risk)

Can I compare qualitative and quantitative approaches?

Yes, but the comparison needs carefully defined units of analysis and criteria that are genuinely commensurate. For mixed comparisons, criteria often need to focus on fit-for-purpose dimensions such as:

the type of claim each approach can support

assumptions and validity risks

feasibility and participant burden

interpretability and auditability (clarifying what each approach makes visible versus what it obscures)

What is the difference between comparative analysis and a systematic review?

A systematic review is designed to answer a research question through transparent, replicable retrieval and appraisal of the evidence base. A comparative analysis in research evaluates plausible alternatives (methods, approaches, theories, technologies) against shared criteria to justify selection and explain trade-offs under constraints. A comparative analysis can draw on evidence identified through a literature review or systematic review, but its output is a criteria-based verdict about fit, not a comprehensive synthesis of study findings.

What should go in the comparative matrix evidence column?

The evidence column should contain traceable support for each matrix claim, preferably with citations.

Typical entries include:

benchmark or comparative evaluation findings

validation study results or robustness evidence

documented assumptions and limitations from foundational papers

methodological critiques or standards relevant to the criterion

notes on uncertainty (unknown, contested, context-specific)

If a claim is based on inference rather than direct evidence, label it explicitly and state the basis for the inference.

Next Step: Build Your Comparison Matrix Faster in thesify

If you are preparing a comparative analysis, you can use thesify to reduce the overhead of cross-reading dense methods papers. Create a free account, upload the key sources you are comparing, and use the side-by-side view to extract assumptions, limitations, and scope conditions directly into your methodological comparison matrix.

Related Resources

Step-by-Step Guide: How to Write a Literature Review: A well-executed literature review serves as a map of existing knowledge, allowing you to determine what has been studied, what remains unresolved, and where your work fits within ongoing academic discussions. It guides your research process by refining focus, shaping methodologies, and ensuring engagement with the most relevant studies. Reviewing literature isn’t just about identifying gaps—it also helps you recognize emerging trends, evolving theories, and shifting methodological approaches. Use this guide to help you track shifts in your field.

How to Get Feedback on Your Methods Section Using thesify: A well‑written methods section is the backbone of a reproducible scientific paper. Journals and research guides remind us that methods sections should contain enough detail for a suitably skilled researcher to replicate the study. They should clearly explain the study design, sampling, data collection and analysis so that readers can judge internal and external validity. In this post you will learn how to use thesify’s section‑level feedback to review and improve your methods section.

How to Evaluate Academic Papers: Decide What to Read, Cite, or Publish: If you’re faced with a stack of new journal articles, you know that feeling of overwhelm – so many papers, so little time. Learn a smarter way to decide which papers to read in depth with quick evaluation techniques. This article includes a quick checklist for screening papers you can print out or keep by your desk. Learn how to scan the abstract and conclusion (do they align with your information needs?), check the author and journal (is it peer-reviewed and reputable?), and review key results (are the findings novel or significant, as far as you can tell?).